In our recent State of Content webinar, I observed that even though individual adoption of generative artificial intelligence (AI) (such as ChatGPT and Claude) has been lightning fast, enterprise adoption of generative AI has been slow. For instance, a recent survey published in CIO magazine found that, in the manufacturing industry alone, “security worries have tripled, accuracy concerns have grown fivefold, and transparency issues have quadrupled.” And Salesforce found that 41% of chief marketing officers say data exposure is a top concern with AI.

As they come to grips with these types of risk, enterprises are also gaining a deeper understanding of how pervasive content is and, therefore, how consequential any risk of applying generative AI to that content is. As Susanna Guzman, a seasoned content leader, reflected in a conversation for my latest edition of The Content Advantage:

One of the biggest opportunities that I see for generative AI is that business leaders who think through the tool’s risks and rewards can’t help but come to a deeper understanding of what content is, how pervasive it is within a business, and how much the employee experience and customer experience depend on it.

In fact, 80% of companies now consider themselves as competing on customer experience (CX), so the stakes for applying generative AI to content in CX are now very high.

So, what are the types of risk involved, exactly? While the specific risks can vary for each enterprise, the Content Science team and I have identified six main areas of generative AI risk for enterprise content. Better understanding these risk areas can help you and your fellow leaders make better decisions about your approach.

1. Lack of Transparency

Your organization’s approach to generative AI should factor in two aspects of transparency as well as documentation.

For Regulators

Legislation of artificial intelligence, including generative AI, has proliferated around the world. The European Union set the tone with its AI Act. In the U.S., legislation is emerging at federal, state, and even local levels. To stay on the right side of most of this emerging regulation, your organization has to be transparent about when and how it’s using AI. You’ll need to demonstrate, for example, that you’re not using AI instead of humans to make high-stakes decisions and that your use of AI does not introduce bias (see 4 below).

For Your Employees + Customers

Best practices so far indicate that employees and customers want to know when and why they’re dealing with AI. For instance, if your organization uses AI to support interviewing candidates, it’s best to explain the purpose and benefits to both the candidates and the employees who will conduct interviews. (For more about the impact of AI on hiring, don’t miss this in-depth guide we recently researched and created for our client partner Terminal.io.)

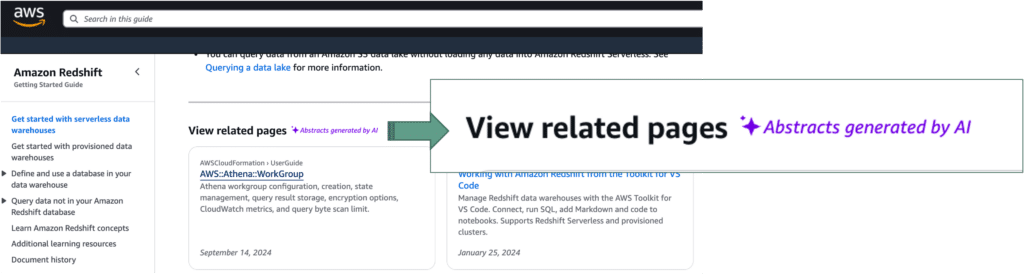

As another example, when your organization uses generative AI to provide content or recommendations, customers want to be aware. AWS does a nice job of showing in their interface when abstracts of related pages are generated by AI, as you can see in this visual:

Also factor in how your enterprise will make your application of generative AI clear in your terms of use or other policies communicated to customers, as Medium does here.

The Value of Documentation + Content Design to Transparency

To shore up any risks due to lack of transparency, documentation is crucial. Organizations that quickly deploy technical documentation and content design capabilities in their generative AI implementation will have an advantage.

2. Accuracy Problems

The longtime saying “garbage in, garbage out” is true for generative AI. What’s new with generative AI is how the garbage can get in. And the better your organization understands how, the more you can reduce the garbage and, therefore, prevent accuracy problems. The consequences of accuracy problems can range from undermining customer trust to public relations disasters to productivity messes to litigation.

Using Generative AI for Math

Generative AI is deep learning AI, which depends heavily on neural networks and large language models (LLMs). That works well for learning language. That doesn’t work well for math calculations. Plainly put, generative AI is bad at math and manipulation of numbers.

Not long ago, I did a search on a blood pressure reading I took at home to check whether it was in the normal range. Google’s AI Overview tried to answer, and unfortunately it answered incorrectly, as shown below and shared on LinkedIn here. The incorrect answer also mentioned Mayo Clinic, which drags an otherwise highly trustworthy brand into the mud.

So, if your enterprise wants to use generative AI in an experience that involves comparing numbers, simple math calculations, or something similar, chances are you’ll need to use additional technology to fill the math gap.
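To make "additional technology to fill the math gap" concrete, here is a minimal sketch in Python. It assumes one possible design: ordinary code does the number comparison against limits from a vetted source, and the result fills a fixed sentence template, so the language model is never asked to compare numbers. The names `NumericRange` and `describe_reading` and the limits 10 and 20 are hypothetical placeholders for illustration, not real clinical thresholds or any vendor's API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NumericRange:
    """An inclusive range supplied by a vetted source, not by the model."""
    low: float
    high: float

    def contains(self, value: float) -> bool:
        # Plain comparison in code: deterministic and easy to test.
        return self.low <= value <= self.high


def describe_reading(label: str, value: float, allowed: NumericRange) -> str:
    """Compare the number in code, then fill a fixed sentence template."""
    verdict = "within" if allowed.contains(value) else "outside"
    return (
        f"{label} of {value:g} is {verdict} "
        f"the range {allowed.low:g} to {allowed.high:g}."
    )


# Placeholder limits for illustration only (hypothetical values).
example_range = NumericRange(low=10, high=20)
print(describe_reading("Reading", 25, example_range))
```

In a setup like this, generative AI could still handle the surrounding language, while any statement that depends on a numeric comparison comes from the deterministic check.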

Garbage in the LLM

If the LLM in your generative AI has inaccurate or outdated content, then your organization is at risk of creating inaccurate or outdated content. And the chances of this risk happening are high now because trusted content sources ranging from The New York Times to Condé Nast are forbidding generative AI solutions from using them at precisely the time that these solutions need more quality content, not less, to meet increasing demand. Data Provenance Initiative, for example, recently noted a 50% drop in data (which in their definition includes content) available to generative AI technologies.

So, what can you do to minimize this risk? Get crystal clear about what goes into the LLM of the generative AI solutions you’re considering. Demand transparency before committing to any large-scale deployment.

A variation of this risk is drift, where the real world changes but your generative AI model doesn’t, so the content generated is no longer accurate. The only way to minimize this risk is vigilant maintenance, so demand transparency about maintenance from the generative AI solutions you might use.

Garbage in Your Organization’s Content + Data

To tailor generative AI for your enterprise, chances are you’ll need to train it on your own content, data, or both. But if that content and data don’t consistently meet your standards or are fraught with errors or lack structure that the LLM can use, you will put your organization at risk. In other words, the more content and data debt your organization has, the bigger the risk of accuracy problems in generative AI.

Our research indicates that organizations that report a high level of content operations maturity are better and faster at leveraging advanced technology, including generative AI. Why? Because these organizations have practices in place (like clear standards and guidelines, effective governance, and integrated workflows) that prevent content debt.

The only way to minimize this risk is to resolve the content and data debt. There are many potential ways to do so, so you have options. The good news is an outside partner as well as other types of AI and tools can accelerate the process. For instance, our team at Content Science recently helped the world’s largest home improvement retailer define comprehensive content standards and guidelines for transactional communications across all relevant channels, from web to email to SMS. If that effort sounds big and complicated, that’s because it was. But we accomplished it in less than three months.

3. Copyright + Intellectual Property Gaps

Your enterprise should consider two aspects of copyright and intellectual property (IP) issues in generative AI. The consequences of copyright and IP gaps can range from being sued to losing competitive advantage.

Unwittingly Using Protected IP

Related to 2, if your organization leverages a generative AI solution that trained on protected IP, then the risk of generating content that violates that protection increases. To date, there are hundreds of IP lawsuits involving generative AI, and I don’t expect that number to decrease anytime soon. Wired is tracking the more prominent cases here. Demand transparency from generative AI solutions about their use of copyrighted or protected IP in their LLMs.

Leaking Your Intellectual Property

If your enterprise provides protected content or data to a generative AI solution without strict controls, that content or data could wind up in the solution’s LLM and, consequently, become available to others using the solution. This is where precision in your agreements with generative AI solutions and in your implementation (defining processes, training teams in the processes, and more) becomes crucial. Organizations that are already mature in their content operations are in a better position to be precise during generative AI implementation.

4. Bias Issues

As your enterprise explores implementing generative AI at scale, think about the risk of introducing bias in your content. The consequences of bias range from not meeting your organization’s commitments to equity, inclusion, or similar values to violating regulations of AI to worsening health inequities.

Biased Material in the LLM or Algorithms

Similar to 2 and 3, if the content or algorithms used by the generative AI solution have bias, you’re at risk. Again, demand transparency about the material, training, and maintenance for any generative AI solution you contemplate deploying at scale.

One potential blind spot in a generative AI solution is localization, or tailoring content for a culture or context beyond simple language translation. This can lead to bias problems such as making culturally insensitive or contextually impossible suggestions. Juviza Rodriguez, senior director for consumer education at March of Dimes, explained how this bias can be disastrous for individual health decisions and for societal health goals, such as improving equity, in our Content Predictions and Plans series. Here is an excerpt.

AI may not pick up on idiomatic expressions or culturally specific phrases. Imagine an AI tool recommending culturally inappropriate treatments or reinforcing dangerous misinformation.

The result? Content that sounds unnatural or may be completely misunderstood by the target audience…exacerbating existing health disparities.

Lack of Inclusive Standards + Rules

If communicating with inclusive language is more ad hoc than mature and systematized at your organization, then chances are your enterprise lacks clear standards and rules with which to train generative AI. And you’re not alone. This gap is another common form of content debt that you must resolve to shore up your risk. As mentioned in 2, an outside partner can accelerate closing this gap.

5. Sustainability Problems

As your enterprise explores the risks of generative AI, think about its impact on your sustainability commitments and your content operations and systems.

Violating Your Sustainability Commitments

For many reasons, enterprises track and communicate publicly about their commitment to sustainability on their own websites and as part of international forums such as UN Global Compact. It turns out generative AI can throw a monkey wrench into this highly visible tracking and communication. That’s because generative AI solutions require vast numbers of computations and, as a result, huge amounts of energy. A generative AI training cluster might consume seven or eight times more energy than a typical computing workload, as MIT Review has noted. So, using generative AI at a large scale might change what your enterprise can claim in meeting sustainability goals.

Creating New Content Debt

If your enterprise implements generative AI successfully, you can create quality content faster than before. That could mean a quick proliferation of content assets to support everything from personalization to A/B testing to filling more content gaps in the customer experience. That’s great. But generative AI doesn’t manage those assets. Is your enterprise ready to manage and govern a deluge of content assets? If not, your organization will fall into content debt quickly, which introduces risks ranging from outdated content in the customer experience to an increase in costs for managing more content assets.

6. Lack of Effectiveness Measurement

Your organization risks making decisions about generative AI planning and implementation in the dark if it’s not measuring content productivity or impact. You also will find it tough to articulate ROI from your generative AI or digital transformation initiative. In a recent Gartner survey, 49% of participants reported that difficulty estimating the value of gen AI projects is an obstacle.

Gaps in Understanding Productivity Impact

For enterprises, the promise made by many generative AI solutions is more and better productivity. Yet, only about a third of organizations are measuring it for content work, as we have found in repeated research. How will you know whether generative AI is delivering on its promise if you can’t compare productivity with generative AI to productivity without it?

Last year, Pfizer transformed their go-to-market approach and, thanks in part to gen AI, accelerated production by 50%. (More about Pfizer’s content-led transformation here.) If they had not clearly measured content productivity before gen AI, Pfizer could not have been certain about the productivity gains and, consequently, the ROI.
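As a rough illustration of why the baseline matters, the sketch below computes a percent change in content throughput before and after adopting generative AI. It assumes one simple productivity measure, assets published per hour worked, and uses made-up numbers. These are not Pfizer’s figures or method, and the function names are hypothetical.

```python
def throughput(assets_published: int, hours_worked: float) -> float:
    """Assets published per hour worked (one assumed productivity measure)."""
    if hours_worked <= 0:
        raise ValueError("hours_worked must be positive")
    return assets_published / hours_worked


def percent_change(baseline: float, current: float) -> float:
    """Percent change from baseline; undefined without a positive baseline."""
    if baseline <= 0:
        raise ValueError("a positive baseline measurement is required")
    return (current - baseline) / baseline * 100


# Hypothetical figures for illustration only.
before = throughput(assets_published=40, hours_worked=400)
after = throughput(assets_published=60, hours_worked=400)
print(f"Change in throughput: {percent_change(before, after):.0f}%")
```

The calculation cannot run without the `before` measurement, which is the point: without a baseline, there is no defensible gain to report.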

Gaps in Understanding Customer Impact

As I mentioned at the outset, the stakes for content in customer experience are high. Most priority business goals or KPIs tie to the impact of content on customers. Does the content make a difference to their perceptions, like trust in your brand, and to actions, like subscribing or buying? And does content generated by AI perform better or make more impact than content generated by humans? How can you optimize the content generated by AI? What insights can you use to better train the generative AI solution? I’m only scratching the surface here of what questions to answer with measurement. But if your organization isn’t already measuring content effectiveness, you’re at risk of wasting time and resources on an implementation that doesn’t achieve your goals.

So, use the slowdown in enterprise adoption of generative AI as an opportunity. Lead your organization into a smarter approach informed by better understanding of these six risk areas.

